The mission of Edifice Lab is to automate the creation of immersive digital learning experiences for the builders and stewards of infrastructure. We accomplish this by developing computer vision technologies for digitizing and abstracting the built environment.

Computer vision is the subfield of artificial intelligence that trains computers to interpret visual data. In the context of the built environment, it is used to automatically monitor safety and security, evaluate efficiency, track progress, detect defects, guide robotic systems, and support the remote presence of scarce experts. At Edifice Lab, we explore the sensors used to collect visual data, the tools used to fuse that data into unified digital representations, and the algorithms that automatically analyze these representations to extract insights for improving facility construction and operations.

We have resources supporting two research programs.

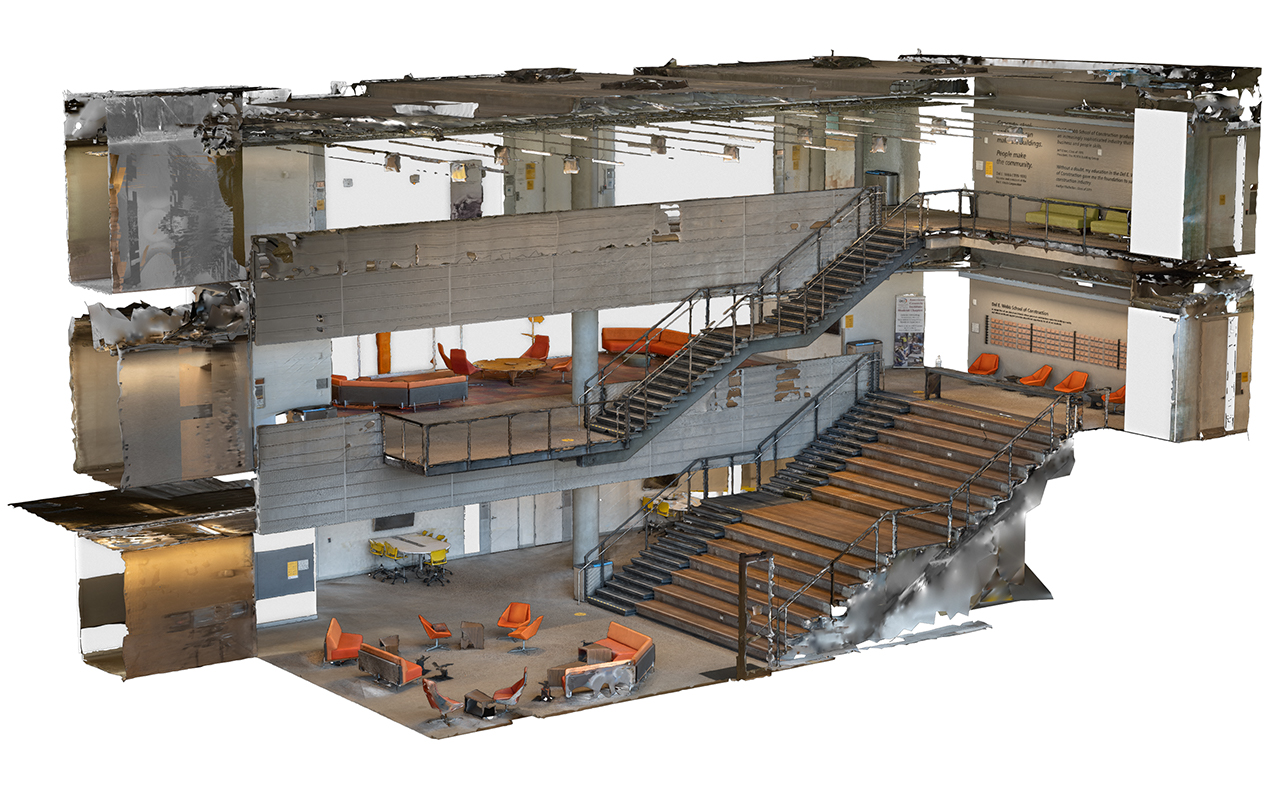

The Hype Program // Creating Hyper-Fidelity Models

Objective 1: To maximize the amount of detail contained in digital twins of the built environment, i.e., to increase scope, resolution, and accuracy in the spatial, temporal, and spectral dimensions.

Hype Sub-Program // Data Collection Marketplace

Objective 1.1: To orchestrate a network of cooperative vision sensors that maximizes operational transparency without infringing on privacy.

Key Terms: laser scanners, lidar, mobile scanners, cameras, wearable cameras, drones, point clouds, photos, videos, photogrammetry, structure from motion, localization, sensor fusion, and big data.

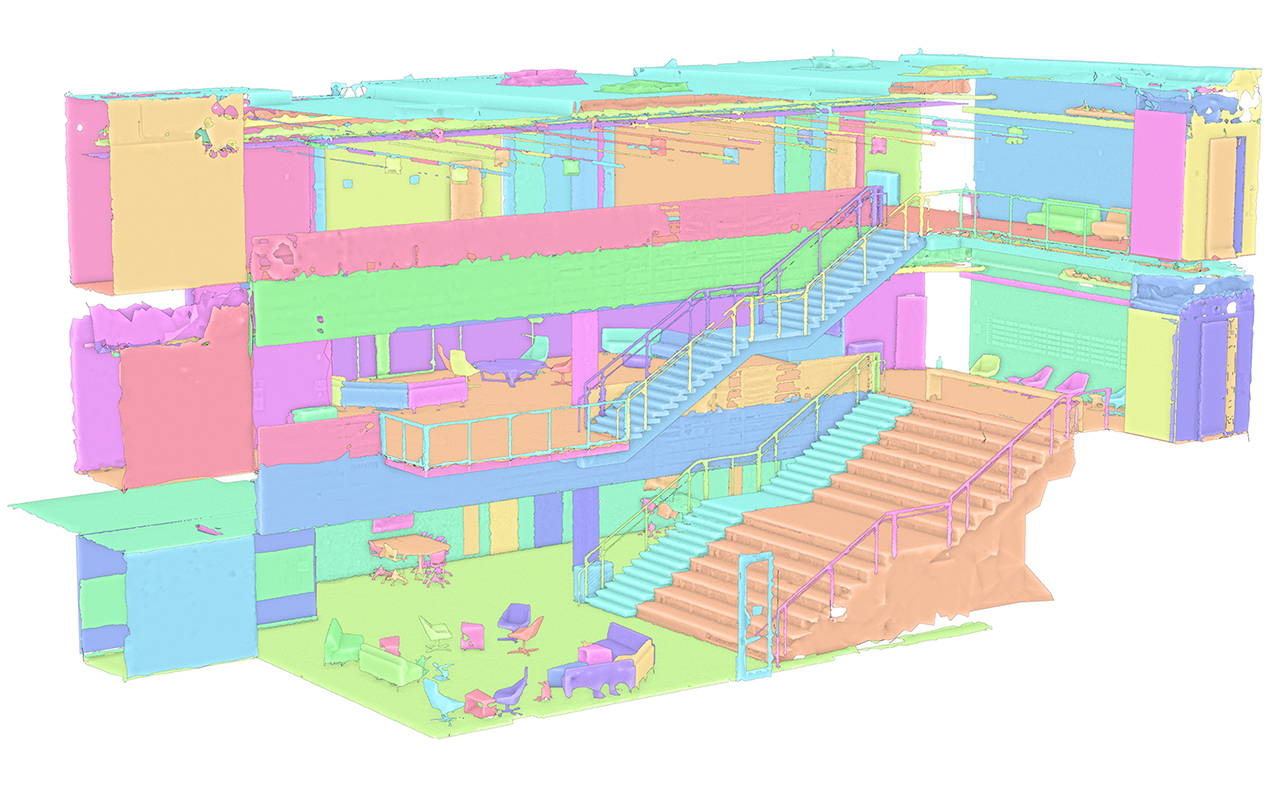

Hype Sub-Program // Data Compression and Enrichment

Objective 1.2: To convert collected data into a format that maximizes interoperability with common commercial modeling tools and game engines.

Key Terms: semantic segmentation, machine learning, scene understanding, artificial neural networks, deep learning, convolutional neural networks, training data, and generative models.

Hype Sub-Program // Productive Model Exploration

Objective 1.3: To provide an engaging human-computer interface that maximizes exploration of information contained in hyper-fidelity models.

Key Terms: generative models, graphics, real-time immersion, information foraging, and embedding visualizations.

The Mod Program // Creating Modular Models

Objective 2: To maximize the amount of relevant information productively understood by users of hyper-fidelity models.

Mod Sub-Program // Model Structure

Objective 2.1: To impose a structure on hyper-fidelity models that minimizes the time required to retrieve novel and relevant information.

Key Terms: hierarchy, change facilitation, advanced work packaging, SOLID, knowledge maps, clustering, and specialization.

Mod Sub-Program // Productive Model Exploitation

Objective 2.2: To provide an engaging human-computer interface that both minimizes interaction with irrelevant detail and maximizes productive comprehension of novel and relevant information.

Key Terms: simplicity, attention, relevant, just-in-time, standardized, entropy management, trust, search, filtering, artificial intelligence question answering, and serious games.